Essa Jan

1st Year Masters @ Brown University

I am a first-year MS CS student at Brown University, advised by Stephen Bach. In my previous, truly amazing life, I completed my undergrad in CS at LUMS, where I worked on the alignment of language models and was fortunate to be advised by Dr. Fareed Zaffar, Dr. Yasir Zaki, and Faizan Ahmad.

I am interested in building safer and more generalizable AI models. My research interests span several areas within AI safety and model understanding, including reasoning, control mechanisms, and the ability of large language models to adapt and improve over time. As these models become increasingly capable, ensuring that they generalize reliably across diverse tasks and contexts is critical for robust and safe deployment. I am therefore particularly interested in developing methods to uncover model limitations, support adaptive learning, and steer models toward safer and more aligned behaviour.

News

Research

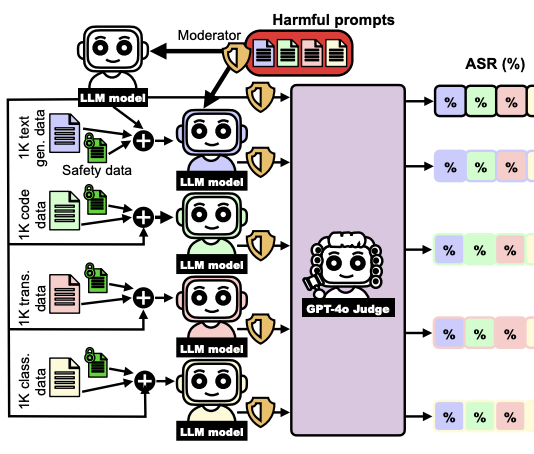

MultitaskBench: Unveiling and Mitigating Safety Gaps in LLMs Fine-tuning

*Essa Jan, *Nouar Aldahoul, Moiz Ali, Faizan Ahmad, Fareed Zaffar, Yasir Zaki

We investigate how fine-tuning on downstream tasks affects the safety guardrails of large language models, and develop a comprehensive benchmark to evaluate safety degradation and the robustness of different safety solutions across multiple task domains.

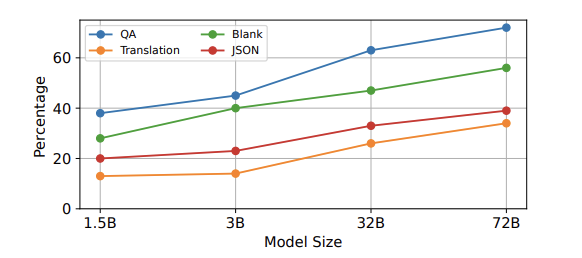

Data Doping or True Intelligence? Evaluating the Transferability of Injected Knowledge in LLMs

*Essa Jan, *Moiz Ali, Fareed Zaffar, Yasir Zaki

We evaluate how LLMs internalize and transfer knowledge injected during fine-tuning through surface-level and deep comprehension tasks. Our study explores whether model size and task demands impact the depth and generalizability of learned knowledge.

YovaCLIP: Multimodal Framework for Forest Fire Detection

Essa Jan, Zubair Anwar, Murtaza Taj

A multimodal pipeline built on vision-language models for real-time, early detection of smoke and fire in forest environments. It is currently deployed across 600+ square kilometers of forest and has processed 10,000+ hours of live footage.

Projects

TradeSnap.ai

An LLM-based stock trading platform that reduces the entire trading process to a single multilingual conversational interface, significantly lowering barriers to entry for retail traders, especially in the developing world.

Urban Power Consumption and Poverty Mapping

Scraped data and developed ML models to analyze the electricity consumption of 3 million consumers, predicting feeder overloads to improve grid management and identifying poverty hotspots.

SafeNet: AI-Powered Content Moderation

An LLM-powered content moderation system that identifies and flags harmful or toxic social media content. Built using the Kaggle Jigsaw challenge dataset.